Conference notes: How to Differentiate Yourself as a Bug Bounty Hunter (OWASP Stockholm)

Posted in Conference notes on November 7, 2018

Posted in Conference notes on November 22, 2022

Hi! After a long hiatus, I’m reviving the blog starting with conference notes.

“Mechanizing the Methodology” is a short but excellent talk given by Daniel Miessler at DEFCON 28 Red Team Village.

I watched it way back in 2020 and forgot to share my notes at that time. But it is still very relevant, so it’s worth (re)discovering.

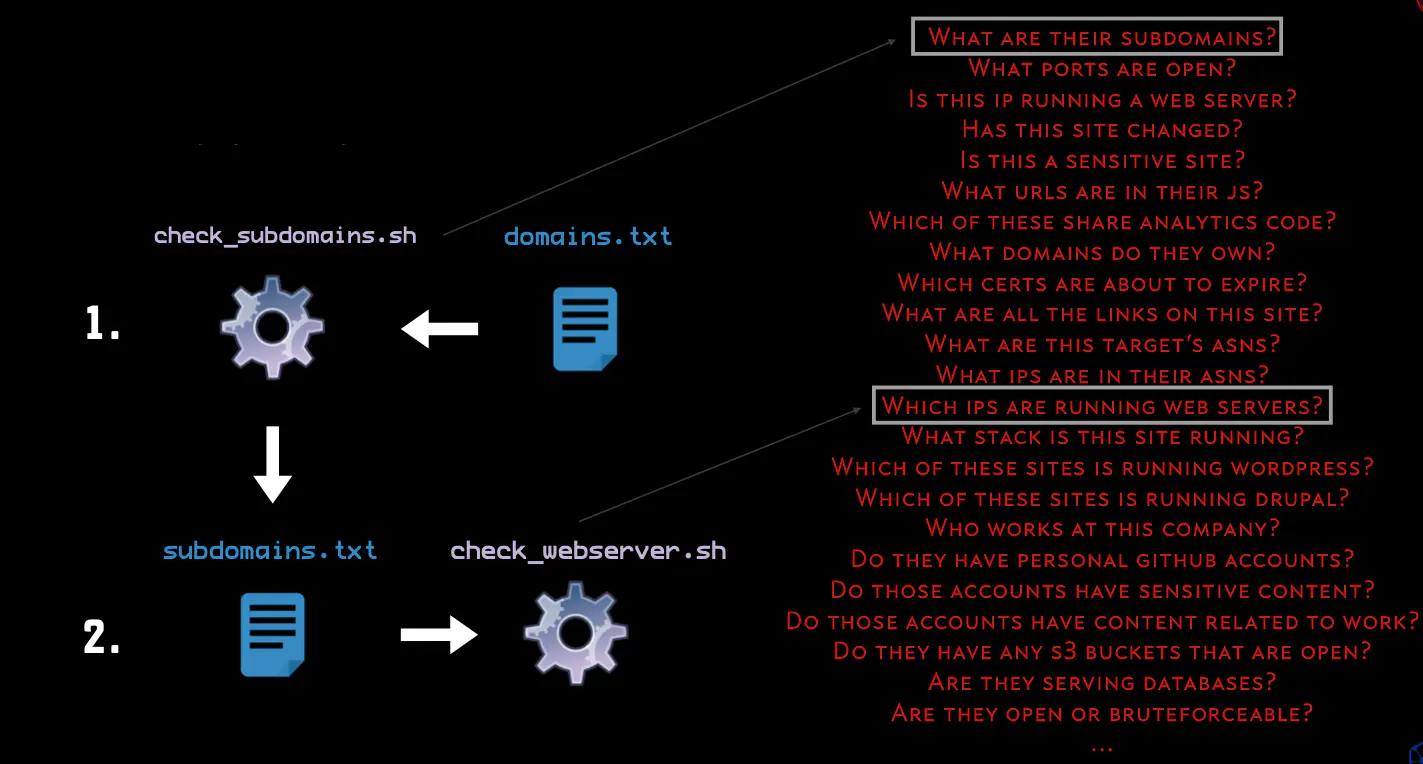

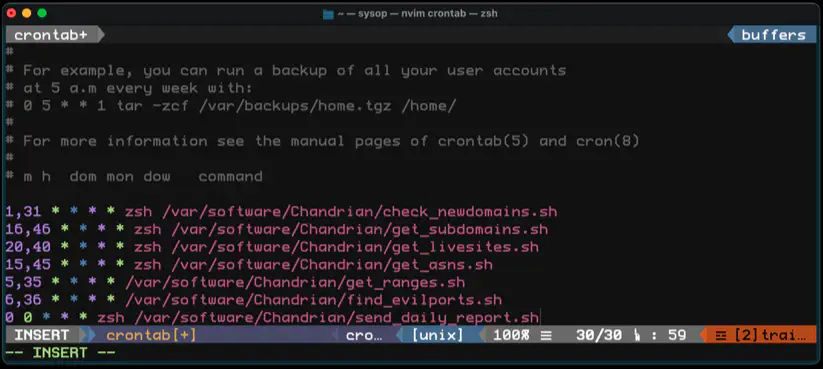

Daniel Miessler shows how to run an automated testing platform on a Linux box, for any kind of testing (pentest or bug bounty):

Why

How

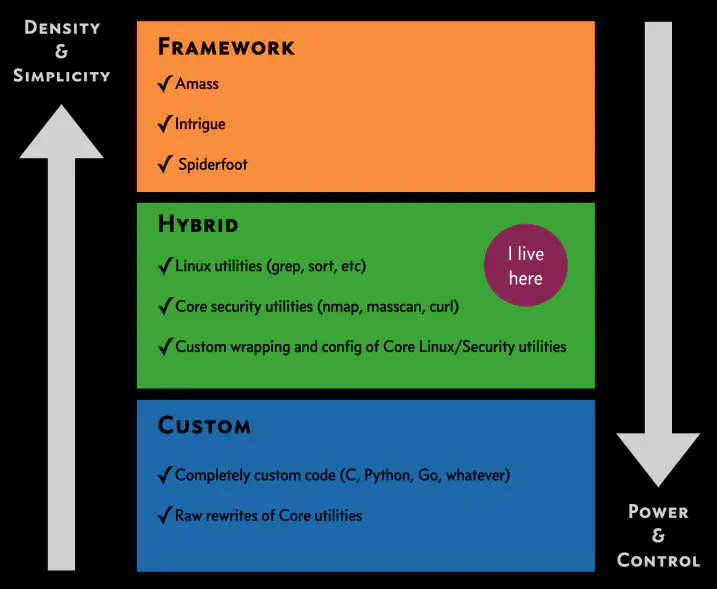

The level of code

Q: For a given IP range, what hosts are alive?

# Return any host that is listening on any of nmap's top 100 ports

# The port list below is the equivalent of nmap's `--top-ports 100`

$ masscan --rate 100000 -p7,9,13,21-23,25-26,37,53,79-81,88,106,110-111,113,119,135,139,143-144,179,199,389,427,443-445,465,513-515,543-544,548,554,587,631,646,873,990,993,995,1025-1029,1110,1433,1720,1723,1755,1900,2000-2001,2049,2121,2717,3000,3128,3306,3389,3986,4899,5000,5009,5051,5060,5101,5190,5357,5432,5631,5666,5800,5900,6000-6001,6646,7070,8000,8008-8009,8080-8081,8443,8888,9100,9999-10000,32768,49152-49157 -iL ips.txt | awk '{ print $6 }' | sort -u > live_ips.txt

$ cat live_ips.txt

1.2.3.4

1.2.3.5

1.2.3.6

1.2.3.7
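The `awk '{ print $6 }'` step relies on masscan's default stdout lines looking like `Discovered open port 80/tcp on 1.2.3.4`. Here is a minimal sketch of the same extraction as a reusable function, so other modules can call it. The function name `extract_live_ips` is my own and not from the talk, and the numeric sort is just a convenience.

```shell
#!/bin/sh
# Hypothetical helper (not from the talk): read masscan's default stdout
# on stdin and print each responding IPv4 address once, in numeric order.
extract_live_ips() {
  # Keep only "Discovered open port N/proto on IP" lines; field 6 is the IP.
  awk '$1 == "Discovered" { print $6 }' |
    # Sort octet by octet so 1.2.3.10 comes after 1.2.3.5, and drop duplicates.
    sort -u -t. -k1,1n -k2,2n -k3,3n -k4,4n
}
```

It would slot into the pipeline above as `masscan ... -iL ips.txt | extract_live_ips > live_ips.txt`.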

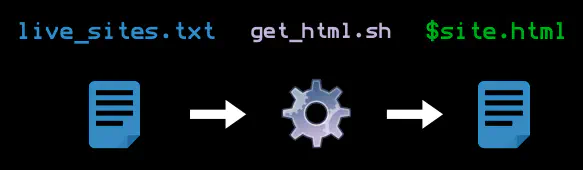

Q: What is the full HTML for a given page?

$ chromium-browser --headless --user-agent='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36' --dump-dom $site > $site.html

The output is saved to $site.html for you to parse and inspect:

$ cat $site.html

<!DOCTYPE html>

<html lang="en-US"><head><meta charset="UTF-8"><meta name="viewport" content="width=device-width, initial-scale=1"> <style media="all">@font-face{font-family:'concourse-t3';src:url(//danielmiessler.com/wp-content/themes/danielmiessler/fonts/concourse_t3_regular-webfont.woff) format('woff');font-style:normal;font-weight:400;font-stretch:normal;font-display:fallback} …
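As an example of what "parse and inspect" could look like, here is a rough sketch that lists every hostname referenced in a dumped page. This is my own illustration, not something shown in the talk: the function name `extract_hosts` is made up, and the regex is deliberately simple (it only catches `http://`, `https://` and protocol-relative `//` references).

```shell
#!/bin/sh
# Hypothetical helper (not from the talk): read HTML on stdin and print
# the unique hostnames found in absolute or protocol-relative URLs.
extract_hosts() {
  # Grab "scheme://host" or "//host" and stop at the first character
  # that cannot be part of a hostname.
  grep -oE '(https?:)?//[A-Za-z0-9.-]+' |
    # Strip the optional scheme and the leading slashes.
    sed -E 's#^(https?:)?//##' |
    sort -u
}
```

Usage would be something like `extract_hosts < "$site.html" > "$site.hosts"`, which gives a quick view of third-party assets a page depends on.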

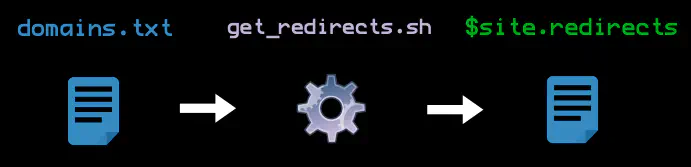

Q: What domains redirect to my domain?

# Uses host.io

$ curl -s "https://host.io/api/domains/redirects/$site?&limit=1000" | jq -r '.domains[]' > $site.redirects

$ head -5 $site.redirects

teslasmail.com

telsamotors-losangeles.com

teslamotorsantartica.com

telsa-newyork.com

mevg.info
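Since the point of the platform is continuous monitoring, one plausible next step is to compare the latest list against the previous run and keep only what is new. The sketch below is an assumption on my part about how that could be done. The function name `new_entries` and the idea of keeping a previous snapshot file are not from the talk.

```shell
#!/bin/sh
# Hypothetical helper (not from the talk): print the lines that appear in
# the new snapshot ($2) but not in the old one ($1).
new_entries() {
  old_sorted="$(mktemp)"
  new_sorted="$(mktemp)"
  # comm needs both inputs sorted the same way.
  sort -u "$1" > "$old_sorted"
  sort -u "$2" > "$new_sorted"
  # -13 hides lines unique to the old file and lines common to both.
  comm -13 "$old_sorted" "$new_sorted"
  rm -f "$old_sorted" "$new_sorted"
}
```

For example, `new_entries "$site.redirects.prev" "$site.redirects"` would print only the redirecting domains that appeared since the last run, where `$site.redirects.prev` is a copy you saved yourself.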

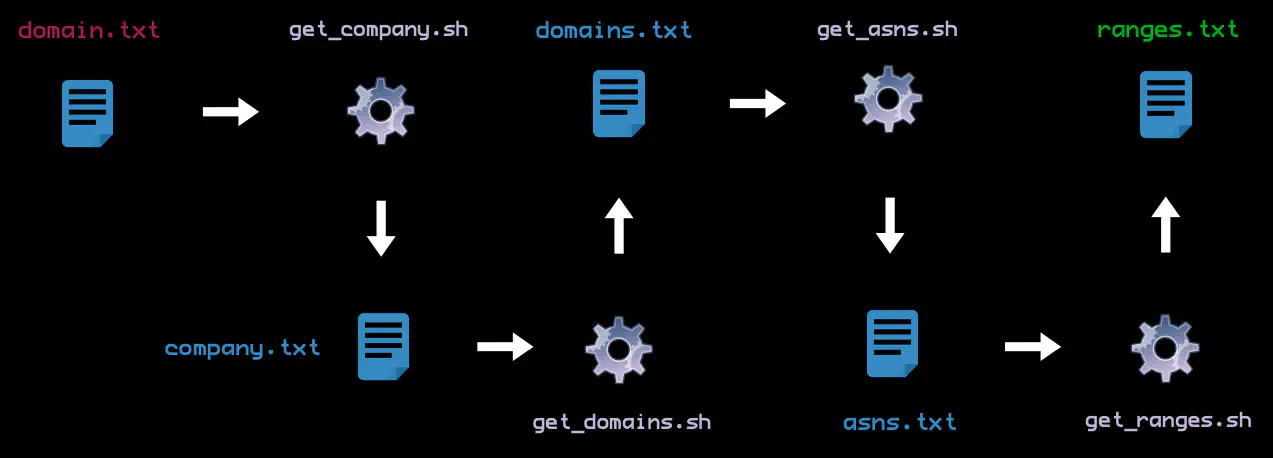

Q: What IP ranges are associated with these ASNs?

# Uses ipinfo.io

$ curl -s "https://ipinfo.io/AS394161/json/" | jq '.prefixes[].netblock' | tr -d \" > ranges.txt

The results are saved to ranges.txt and can be passed to other testing modules:

$ head -5 ranges.txt

192.95.64.0/24

199.120.48.0/24

199.120.49.0/24

199.120.50.0/24

199.66.10.0/24
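Before handing a ranges file to a scanner, it can help to know how many addresses it actually covers. Here is a small sketch for that, assuming one IPv4 CIDR per line as in the output above. The function name `count_addresses` is hypothetical, and lines that are not IPv4 CIDRs are silently skipped.

```shell
#!/bin/sh
# Hypothetical helper (not from the talk): read IPv4 CIDR ranges on stdin
# and print the total number of addresses they cover.
count_addresses() {
  awk -F/ '
    # Only accept lines shaped like a.b.c.d/prefix with a prefix of 32 or less.
    /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+\/[0-9]+$/ && $2 <= 32 {
      total += 2 ^ (32 - $2)   # a /24 holds 2^8 = 256 addresses
    }
    END { printf "%.0f\n", total }
  '
}
```

Running `count_addresses < ranges.txt` gives a quick size estimate, and the same file can then go straight to the live-host step through masscan's `-iL` option.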

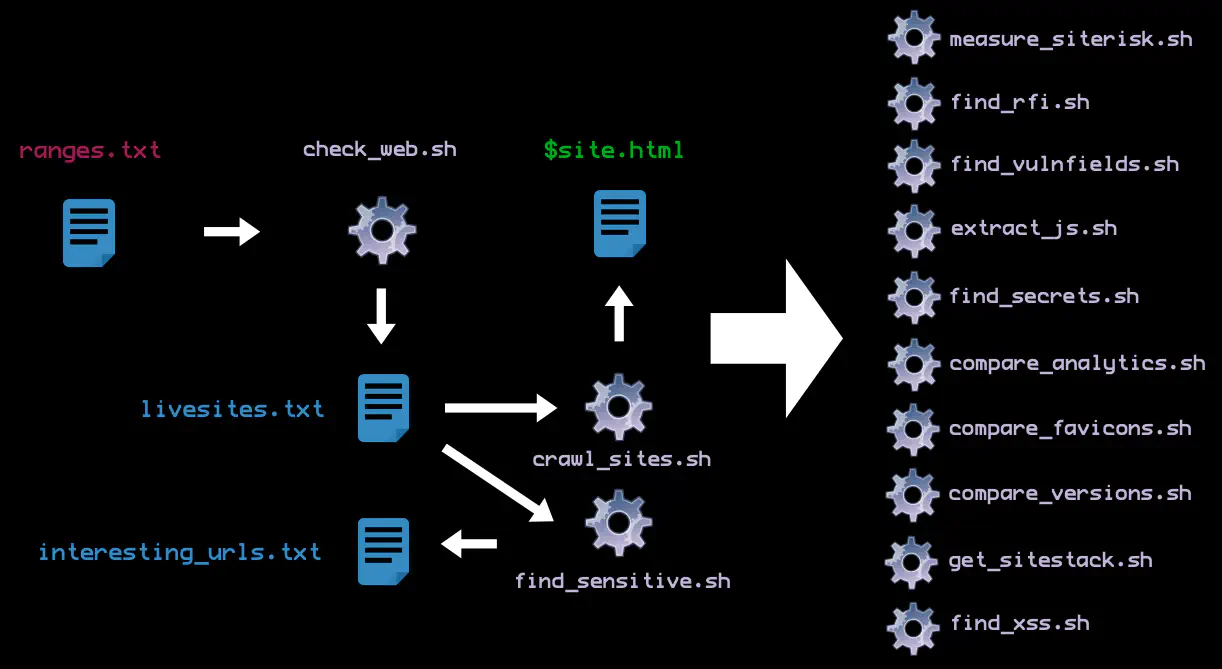

Q: What is the workflow I need to answer a particular question?

# Run sSMTP on Amazon SES to send emails

ssmtp "$RECIPIENT" < domain.notification

# Send a message to Slack using an Incoming Webhook

curl -X POST -H 'Content-type: application/json' --data '{"text":"Hey, there’s a new yummy (open) PostgresDB @1.2.3.4"}' YOUR_WEBHOOK_URL
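When the alert text comes from scan results rather than a hard-coded string, the JSON body needs escaping or a stray quote will break the request. Below is a minimal sketch of a wrapper for the webhook call. The names `slack_payload`, `notify_slack` and the `SLACK_WEBHOOK_URL` environment variable are my own placeholders, and it assumes single-line messages.

```shell
#!/bin/sh
# Hypothetical helpers (not from the talk).

# Build a {"text": "..."} JSON body from a single-line message,
# escaping backslashes and double quotes.
slack_payload() {
  escaped="$(printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g')"
  printf '{"text":"%s"}\n' "$escaped"
}

# Post the message to the Incoming Webhook stored in SLACK_WEBHOOK_URL
# (an assumed variable name; set it to your own webhook URL).
notify_slack() {
  curl -sS -X POST -H 'Content-type: application/json' \
    --data "$(slack_payload "$1")" "$SLACK_WEBHOOK_URL"
}
```

A module could then end with something like `notify_slack "New open PostgreSQL at $ip"`, where `$ip` is whatever the previous step found.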

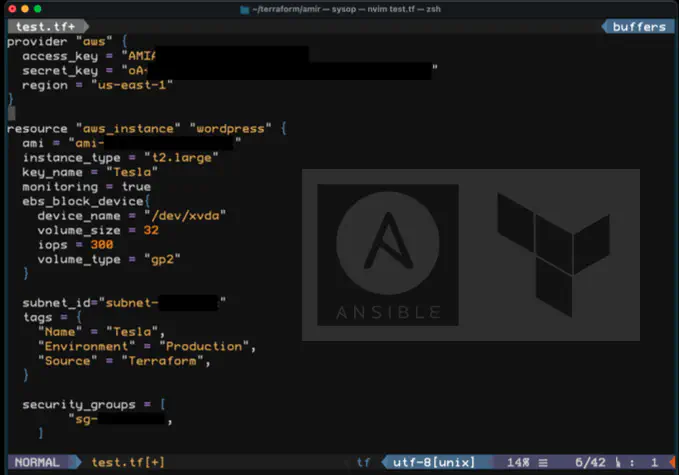

With a single `terraform apply`, his monitoring and alerting go live on the Internet.